Docker: Complete Guide to Containerization Technology

What is Docker? Demystifying Containerization

The Core Concept

Docker is a containerization platform that packages your applications and all their dependencies into lightweight, portable containers. Think of it as a shipping container for your software—just as physical shipping containers revolutionized global trade by standardizing how goods are transported, Docker containers standardize how applications are packaged and deployed.

But what exactly is a container? Imagine you're moving to a new apartment. Instead of packing each item separately and hoping everything fits in your new space, you pack everything into standardized boxes that can be moved anywhere and will work the same way regardless of the destination. That's essentially what Docker does for your applications.

Breaking Down the Container vs. Virtualization Debate

When I first encountered Docker, I thought it was just another virtualization technology. I couldn't have been more wrong. Traditional virtual machines create complete copies of entire operating systems, which is like renting separate apartments for each of your projects—expensive and resource-heavy.

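Docker containers, on the other hand, share the host operating system's kernel while isolating each application's processes, filesystem, and network through kernel features such as namespaces and cgroups. It's more like having separate rooms in the same building: each room is private, but they all share the same foundation. That shared foundation also means the isolation is lighter than a VM's, since a kernel-level flaw affects every container on the host.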

The Docker Architecture That Powers It All

Docker operates on a client-server architecture that consists of three main components:

- Docker Engine: The daemon (dockerd) and runtime that build, run, and manage containers on your system

- Docker Client: The command-line interface (docker) you use to send commands to the engine

- Docker Registry: A service, such as Docker Hub, where Docker images are stored and shared

This architecture allows you to build an image once and run it anywhere Docker is installed, whether that's your local development machine, a staging server, or a production cluster running thousands of containers.

The Container Revolution That Changed Everything

Picture this: It's 2 AM, and you're frantically debugging why your application works perfectly on your laptop but crashes spectacularly on the production server. Sound familiar? If you've been in software development for more than five minutes, you've probably lived through this nightmare scenario.

After spending countless nights wrestling with environment inconsistencies, dependency conflicts, and the infamous "but it works on my machine" syndrome, I discovered Docker. What started as curiosity about this "containerization thing" everyone was talking about turned into a complete transformation of how I approach software deployment.

Docker has revolutionized how we build, ship, and run applications. With over 79% of organizations now using containerization technology, it's clear that Docker isn't just a trend—it's become an essential tool in modern software development. Whether you're a solo developer tired of environment headaches or part of a team struggling with deployment consistency, this comprehensive guide will walk you through everything you need to master Docker.

What you'll discover:

- The fundamental concepts that make Docker so powerful

- Practical implementation strategies I've learned through real projects

- Step-by-step tutorials to get you running containers in minutes

- Real-world use cases that will transform your development workflow

Why Docker Matters: The Game-Changing Benefits

🔄 Consistency Across Environments

The most immediate benefit I experienced with Docker was the end of environment-related surprises. Remember those deployment disasters caused by different versions of Node.js, Python, or system libraries? Docker removes most of these issues.

When you containerize your application, you're packaging not just your code, but the exact runtime environment it needs to function. The container that runs on your laptop is created from the same image as the one running in production: same base OS userland, same system libraries, same dependencies. Only the host kernel and externally supplied configuration (environment variables, mounted files) can differ.

⚡ Resource Efficiency That Actually Matters

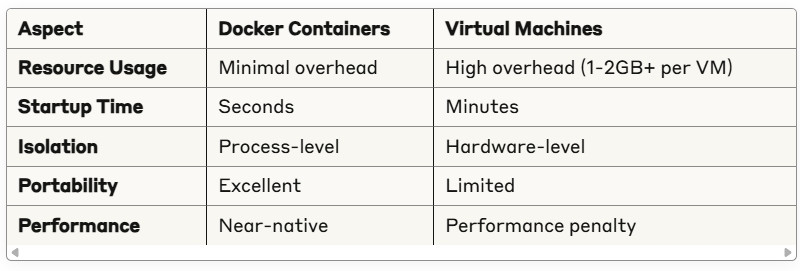

Traditional virtual machines typically consume 1-2GB of RAM just for the operating system, before your application even starts. Docker containers share the host OS kernel, using only the resources your application actually needs.

In my experience, I've seen applications that required 4GB of memory in a VM run comfortably in 500MB containers. This isn't just about saving money on cloud bills—it's about being able to run more services on the same hardware and achieving better performance.

📈 Scalability and Microservices Made Simple

Docker containers are perfect building blocks for microservices architecture. Each service runs in its own container, can be scaled independently, and communicates through well-defined APIs.

I've worked on projects where we scaled individual components from 1 to 100 instances in minutes during traffic spikes, all because Docker makes it trivial to spin up new container instances. The granular control you get over each service is transformative for application architecture.

🚀 DevOps Integration That Actually Works

Docker integrates seamlessly into CI/CD pipelines. You can build your container image once, test it in exactly the same environment it will run in production, and deploy with confidence.

The automation possibilities are endless. I've set up pipelines that automatically build, test, and deploy applications across multiple environments without any manual intervention. The consistency Docker provides makes this level of automation not just possible, but reliable.

💰 Cost Optimization Through Efficiency

Beyond the obvious infrastructure savings from better resource utilization, Docker reduces operational costs in ways you might not expect. Faster deployment cycles mean less developer time spent on deployment issues. Consistent environments mean less time debugging environment-specific problems.

Docker vs. The Competition: Making the Right Choice

Docker vs. Virtual Machines: The Definitive Comparison

Virtual machines still have their place for complete OS isolation or when you need to run different operating systems. But for application deployment and development environment consistency, containers are the clear winner.

Docker vs. Alternative Container Technologies

While Docker popularized containerization, it did not invent it, and alternatives such as Podman have emerged. Podman offers rootless containers and doesn't require a daemon, which appeals to security-conscious organizations. containerd, the runtime that Docker Engine itself builds on, is also used directly by platforms such as Kubernetes. However, Docker's ecosystem, documentation, and community support remain unmatched.

For most developers and organizations, Docker provides the best balance of features, stability, and community support. The learning curve and tooling maturity make it the pragmatic choice for getting started with containerization.

Getting Started with Docker: Your First Steps

Installation Made Simple

Getting Docker running on your system is surprisingly straightforward, regardless of your operating system.

For Windows and Mac users, Docker Desktop provides the easiest installation experience. It includes everything you need: the Docker Engine, CLI tools, and a helpful GUI for managing containers.

For Linux users, you can install Docker directly through your distribution's package manager. The process varies slightly between distributions, but the end result is the same: a powerful containerization platform at your fingertips.

Your First Docker Container

Once Docker is installed, let's verify everything works with the traditional "Hello World" example:

bash

docker run hello-world

This simple command does something remarkable behind the scenes:

- Docker checks if the "hello-world" image exists locally

- If not found, it downloads the image from Docker Hub

- Creates a new container from that image

- Runs the container and displays its output

- The container exits after completing its task
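The steps above can be approximated with separate CLI calls. This is a rough sketch of the sequence, not what the Docker client literally does internally; the function name run_step_by_step is made up for illustration, and running it needs a working Docker daemon.

```bash
#!/usr/bin/env bash
# Sketch: the steps behind `docker run IMAGE`, spelled out as separate CLI calls.
# run_step_by_step is a hypothetical helper name, not part of Docker.
run_step_by_step() {
  local image="$1"
  local container_id

  # Steps 1-2: use the local image if it exists, otherwise pull it.
  if ! docker image inspect "$image" >/dev/null 2>&1; then
    docker pull "$image" || return 1
  fi

  # Step 3: create (but do not start) a container from the image.
  container_id="$(docker create "$image")" || return 1

  # Steps 4-5: start it attached so its output is shown; returns when it exits.
  docker start --attach "$container_id"
}

# Usage (requires a running Docker daemon):
#   run_step_by_step hello-world
```

Unlike a plain docker run, this leaves the stopped container behind, so it still appears in docker ps -a until you remove it.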

Essential Docker Commands Every Developer Needs

After years of working with Docker, these are the commands I use daily:

Managing Images:

bash

# Pull an image from Docker Hub

docker pull nginx:latest

# List all local images

docker images

# Remove an image

docker rmi image-name

Working with Containers:

bash

# Run a container in detached mode

docker run -d --name my-app nginx

# List running containers

docker ps

# Stop a running container

docker stop my-app

# Remove a container

docker rm my-app

Building Custom Images:

bash

# Build an image from a Dockerfile

docker build -t my-custom-app .

# Run your custom container

docker run -p 8080:80 my-custom-app

Understanding Docker Images and Layers

Docker images are built in layers, like an onion. Instructions that change the filesystem (RUN, COPY, and ADD) each create a new layer, while instructions such as ENV, EXPOSE, and CMD only record metadata. This layered approach is brilliant for several reasons:

- Efficiency: Layers are cached and reused across images

- Speed: Only changed layers need to be rebuilt

- Storage: Common layers are shared between images

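When you modify your application code, Docker rebuilds the layer that copies that code and every layer after it, while earlier layers (such as installed dependencies) come from cache. This keeps iterative builds fast, provided the steps that rarely change come first in your Dockerfile.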
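To make the caching behavior concrete, here is a toy model in shell. It is a simplification and not Docker's actual cache implementation: each layer's key is a checksum of the previous key plus the instruction text, and the "(files: v1)" suffix stands in for the checksum Docker computes over copied files. The function name layer_keys is invented for this sketch.

```bash
#!/usr/bin/env bash
# Toy model of layer caching (NOT Docker's real algorithm): each layer's
# cache key is derived from the parent layer's key plus the instruction text.
layer_keys() {
  local key="scratch" instruction
  for instruction in "$@"; do
    # Chaining the parent key into the new key means a change invalidates
    # this layer and every layer that comes after it.
    key="$(printf '%s\n%s' "$key" "$instruction" | cksum | cut -d' ' -f1)"
    printf '%s  %s\n' "$key" "$instruction"
  done
}

echo "Build 1:"
layer_keys "FROM node:16" "COPY package.json ./ (files: v1)" \
  "RUN npm install" "COPY src ./src (files: v1)"

echo "Build 2 (only the application source changed):"
layer_keys "FROM node:16" "COPY package.json ./ (files: v1)" \
  "RUN npm install" "COPY src ./src (files: v2)"
```

The first three keys match between the two builds and only the last one differs, which mirrors why dependency installation is usually placed before the step that copies source code.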

Container Lifecycle and Data Persistence

Containers are ephemeral by design: anything written inside a container lives in its writable layer and is lost when the container is removed. For applications that need to persist data, Docker provides volumes and bind mounts.

bash

# Create a volume for persistent data

docker volume create my-data

# Run a container with mounted volume

docker run -v my-data:/app/data my-application

This separation between application logic and data storage is one of Docker's most powerful features, enabling true stateless applications.
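One way to see this persistence in action is to write a file from one container and read it from another. The sketch below assumes the public alpine image is available; demo-data, /data/note.txt, and the function name are hypothetical and chosen only for the demonstration.

```bash
#!/usr/bin/env bash
# Sketch: show that data in a named volume outlives the container that wrote it.
# Assumes the public `alpine` image; `demo-data` is a hypothetical volume name.
demo_volume_persistence() {
  local volume="${1:-demo-data}"

  docker volume create "$volume" >/dev/null || return 1

  # The first container writes a file into the volume, then is removed (--rm).
  docker run --rm -v "$volume":/data alpine \
    sh -c 'echo "written by container 1" > /data/note.txt' || return 1

  # A brand-new container mounting the same volume can still read the file.
  docker run --rm -v "$volume":/data alpine cat /data/note.txt
}

# Usage (requires a running Docker daemon):
#   demo_volume_persistence
#   docker volume rm demo-data   # clean up afterwards
```

Both containers are gone by the time the function returns, yet the file survives because it lives in the volume rather than in either container's writable layer.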

Docker in Practice: Real-World Applications

Web Application Deployment Revolution

The most common use case I've encountered is deploying web applications. Instead of installing Node.js, Python, or PHP directly on servers, you package everything into containers.

Here's a typical multi-tier application setup I've used repeatedly:

- Frontend: React application in an nginx container

- Backend: Node.js API in a custom container

- Database: PostgreSQL in its official container

- Cache: Redis container for session storage

Each component runs independently, can be scaled separately, and can be updated without affecting the others. This modular approach transforms how you think about application architecture.

Microservices Architecture Enablement

Docker containers are perfect building blocks for microservices. In a recent project, we broke down a monolithic application into 12 separate services, each running in its own container.

The benefits were immediate:

- Independent deployments: Update one service without touching others

- Technology diversity: Use Python for ML services, Node.js for APIs, Go for performance-critical components

- Fault isolation: One service failure doesn't bring down the entire system

- Team autonomy: Different teams can own different services completely

Development Environment Standardization

One of Docker's most underappreciated benefits is standardizing development environments. How many times have you heard "it works on my machine" or spent hours helping a new team member set up their development environment?

With Docker, new developers can be productive in minutes:

bash

# Clone the repository

git clone project-repo

# Start the entire development stack

docker-compose up

# Everything is running and ready for development

No more installation guides, no more version conflicts, no more "my local setup is different" excuses.

CI/CD Pipeline Integration

Docker transforms continuous integration and deployment pipelines. Instead of deploying code and hoping the production environment is configured correctly, you deploy the exact same container that passed all your tests.

My typical CI/CD pipeline looks like this:

- Code commit triggers the pipeline

- Build the Docker image with the new code

- Test using the same container image

- Deploy the tested image to production

- Monitor and roll back if needed

The consistency this provides is game-changing for deployment confidence.
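The build, test, and deploy steps of such a pipeline could be scripted roughly as follows. This is a sketch under assumptions, not a complete pipeline: registry.example.com/my-app is a hypothetical image name, npm test is a stand-in for whatever test command your image provides, and monitoring and rollback are left out.

```bash
#!/usr/bin/env bash
# Sketch of the build -> test -> push flow as one script.
# Hypothetical names: registry.example.com, my-app, and the `npm test` command.

# Build an image reference from a registry, a name, and a commit SHA.
image_ref() {
  printf '%s/%s:%s\n' "$1" "$2" "$3"
}

run_pipeline() {
  local commit_sha="$1"
  local ref
  ref="$(image_ref "registry.example.com" "my-app" "$commit_sha")"

  # Build the image once, tagged with the commit that produced it.
  docker build -t "$ref" . || return 1

  # Run the test suite inside the image that was just built.
  docker run --rm "$ref" npm test || return 1

  # Push the tested image; production pulls this same tag.
  docker push "$ref"
}

# Usage in a CI job (requires Docker and registry credentials):
#   run_pipeline "$(git rev-parse --short HEAD)"
```

Tagging by commit SHA rather than latest keeps each deployed image traceable to the code that produced it, and a failed test run stops the script before anything is pushed.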

Docker Best Practices and Security Essentials

Image Optimization Strategies

After building hundreds of Docker images, I've learned that image size matters. Smaller images mean faster deployments, reduced storage costs, and improved security through a smaller attack surface.

Multi-stage builds are your secret weapon:

dockerfile

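# Build stage

FROM node:16 AS builder

WORKDIR /app

COPY package*.json ./

RUN npm install

COPY . .

RUN npm run build

# Production stage

FROM node:16-alpine

WORKDIR /app

# Copy only the build output; assumes the build emits a self-contained dist/server.js

COPY --from=builder /app/dist /app

EXPOSE 3000

CMD ["node", "server.js"]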

This approach separates build dependencies from runtime dependencies, often reducing final image size by 70-80%.

Security Considerations That Matter

Container security isn't optional—it's essential. The practices I follow religiously:

Use official base images whenever possible. They're maintained by the software vendors and receive regular security updates.

Run containers as non-root users. This simple change prevents many potential security issues:

dockerfile

# Alpine (BusyBox) syntax; Debian-based images use groupadd/useradd instead

RUN addgroup -g 1001 -S nodejs

RUN adduser -S nextjs -u 1001 -G nodejs

USER nextjs

Scan images for vulnerabilities before deployment. Many container registries provide built-in vulnerability scanning.

Keep images updated. Regularly rebuild and redeploy images to get the latest security patches.

Production Deployment Guidelines

Running containers in production requires additional considerations beyond development. Resource limits prevent containers from consuming excessive system resources:

bash

docker run --memory="512m" --cpus="1.0" my-application

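Health checks let Docker track whether your application is actually responding, not just whether its process is running. The example below assumes curl is installed in the image, which is not the case for minimal bases such as Alpine: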

dockerfile

HEALTHCHECK --interval=30s --timeout=3s --start-period=5s --retries=3 \

CMD curl -f http://localhost:3000/health || exit 1
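Once an image defines a HEALTHCHECK, a deployment script can wait for the container to report healthy before sending it traffic. The following is a minimal sketch; wait_until_healthy is a hypothetical helper, and the attempt count and delay are arbitrary defaults.

```bash
#!/usr/bin/env bash
# Sketch: poll a container's health status until it reports "healthy".
# The container must define a HEALTHCHECK; the name passed in is up to you.
wait_until_healthy() {
  local container="$1"
  local max_attempts="${2:-30}"
  local delay_seconds="${3:-2}"
  local attempt=1 status=""

  while [ "$attempt" -le "$max_attempts" ]; do
    # .State.Health.Status is one of: starting, healthy, unhealthy.
    # The call fails (empty status) if the container or its health check is missing.
    status="$(docker inspect --format '{{.State.Health.Status}}' "$container" 2>/dev/null)" || status=""
    if [ "$status" = "healthy" ]; then
      return 0
    fi
    sleep "$delay_seconds"
    attempt=$((attempt + 1))
  done

  echo "container $container not healthy after $max_attempts attempts (last status: ${status:-unknown})" >&2
  return 1
}

# Usage (requires a running Docker daemon and a container named my-app):
#   wait_until_healthy my-app 30 2 && echo "ready for traffic"
```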

Advanced Docker Concepts for Power Users

Docker Compose: Orchestrating Multi-Container Applications

Docker Compose transforms complex multi-container setups into simple, declarative configurations. Instead of running multiple docker commands, you define your entire application stack in a single file:

yaml

version: '3.8'

services:
  web:
    build: .
    ports:
      - "8000:8000"
    depends_on:
      - db
  db:
    image: postgres:13
    environment:
      POSTGRES_PASSWORD: secret

With one command, docker-compose up (or docker compose up with the newer Compose plugin), your entire application stack is running and ready.

Container Orchestration Introduction

While Docker Compose works well for single-host deployments, production applications often require orchestration across multiple hosts. Kubernetes has become the de facto standard for container orchestration, providing features like:

- Automatic scaling based on resource usage

- Load balancing across multiple container instances

- Rolling updates with zero downtime

- Service discovery and networking

Docker Swarm provides a simpler alternative for smaller deployments, offering basic orchestration features without Kubernetes' complexity.

Networking and Storage Deep Dive

Docker's networking capabilities extend far beyond basic port mapping. Custom networks enable complex application architectures:

bash

# Create a custom network

docker network create app-network

# Run containers on the same network

docker run --network app-network --name api my-api

docker run --network app-network --name frontend my-frontend

Containers on the same network can communicate using container names as hostnames, simplifying service discovery.

For storage, Docker provides multiple options:

- Volumes: Managed by Docker, perfect for database storage

- Bind mounts: Direct filesystem access, great for development

- tmpfs mounts: In-memory storage for temporary data

Troubleshooting Common Docker Issues

The Problems You'll Actually Encounter

After years of Docker usage, certain issues appear repeatedly. Here are the ones I see most often and their solutions:

Port conflicts are common when multiple containers try to use the same port. The solution is simple—map to different host ports:

bash

# Instead of conflicting ports

docker run -p 8080:80 app1

docker run -p 8080:80 app2 # This fails!

# Use different host ports

docker run -p 8080:80 app1

docker run -p 8081:80 app2
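If you start several containers from a script, you can catch duplicate host ports before Docker rejects them. This is a small sketch in plain shell that never calls Docker; find_host_port_conflicts is a hypothetical helper, and it only understands the simple HOST:CONTAINER form, not IP:HOST:CONTAINER.

```bash
#!/usr/bin/env bash
# Sketch: spot duplicate host ports in a list of HOST:CONTAINER mappings
# before starting anything. Pure shell; does not call Docker.
find_host_port_conflicts() {
  local mapping
  for mapping in "$@"; do
    # Keep only the host side of "HOST:CONTAINER".
    printf '%s\n' "${mapping%%:*}"
  done | sort | uniq -d
}

conflicts="$(find_host_port_conflicts "8080:80" "8080:80" "8081:80")"
if [ -n "$conflicts" ]; then
  echo "Host ports requested more than once: $conflicts"
fi
# prints: Host ports requested more than once: 8080
```

To see which host ports running containers already hold, docker ps lists them in its PORTS column.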

Image build failures often stem from incorrect Dockerfile syntax or missing dependencies. Always test your Dockerfile instructions step by step.

Container startup issues usually indicate missing environment variables or incorrect command specifications. Use docker logs container-name to diagnose startup problems.
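When a container refuses to start, it helps to gather its state, recent logs, and environment in one go. The helper below is a sketch of that habit; diagnose_container is a made-up name, and the 50-line log tail is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Sketch: collect the usual first-look diagnostics for a container that
# will not start. diagnose_container is a hypothetical helper name.
diagnose_container() {
  local container="$1"

  echo "== State =="
  # Status (e.g. exited), exit code, and any error recorded by the daemon.
  docker inspect --format \
    'status={{.State.Status}} exit_code={{.State.ExitCode}} error={{.State.Error}}' \
    "$container"

  echo "== Last 50 log lines =="
  docker logs --tail 50 "$container"

  echo "== Environment passed to the container =="
  docker inspect --format '{{range .Config.Env}}{{println .}}{{end}}' "$container"
}

# Usage (requires a running Docker daemon):
#   diagnose_container container-name
```

A non-zero exit code together with an empty log usually points at the command itself, while a missing variable tends to show up in the environment listing.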

Performance Optimization in Practice

Container performance optimization focuses on resource usage and startup time. The techniques that have made the biggest impact in my projects:

Resource monitoring helps identify containers consuming excessive CPU or memory:

bash

# Monitor resource usage in real-time

docker stats

# Get detailed container information

docker inspect container-name

Container optimization through proper base image selection and dependency management can dramatically improve performance. Alpine Linux base images, for example, are typically 10-20x smaller than full Ubuntu images.

Your Docker Journey Starts Here

Docker represents more than just a technology—it's a fundamental shift in how we think about application deployment and environment management. The consistency, efficiency, and scalability benefits I've experienced firsthand have transformed not just my technical approach, but entire development workflows.

The journey from Docker novice to containerization expert isn't always smooth, but the payoff is enormous. Start small—containerize a simple application, experiment with Docker Compose for multi-service setups, and gradually incorporate Docker into your development and deployment processes.

Your next steps:

- Install Docker and run your first container today

- Containerize an existing application to see the benefits firsthand

- Experiment with Docker Compose for multi-service applications

- Integrate Docker into your CI/CD pipeline

- Explore orchestration platforms like Kubernetes when you're ready to scale

The containerization revolution is here, and Docker is your entry point into this new world of consistent, scalable, and efficient application deployment.

Frequently Asked Questions

What is Docker and why is it important?

Docker is a containerization platform that packages applications and their dependencies into lightweight, portable containers. It's important because it solves deployment consistency issues, improves resource efficiency, and enables scalable microservices architectures. From my experience, Docker eliminates the "works on my machine" problem that has plagued developers for decades.

How is Docker different from virtual machines?

Docker containers share the host OS kernel, making them more lightweight than VMs. While VMs virtualize hardware and each run a full guest operating system, containers are isolated processes on the host kernel that bundle only the application and its userland dependencies, resulting in faster startup and better resource utilization. In practice, I've seen containers use 80-90% less memory than equivalent VM setups.

Is Docker free to use?

Docker offers both free and paid options. Docker Engine and the docker CLI are open source and free to use. Docker Desktop is free for personal use, education, and small businesses, but larger organizations need a paid subscription under Docker's current licensing terms; check the official pricing page for the exact thresholds. For most developers and small teams, the free tier provides everything needed to get started.

What programming languages work with Docker?

Docker supports applications written in any programming language including Python, Java, JavaScript, Go, PHP, Ruby, and .NET. The containerization is language-agnostic since it packages the entire runtime environment. I've successfully containerized applications across dozens of different technologies and frameworks.

How do I install Docker on Windows?

Install Docker Desktop for Windows from the official Docker website. It runs on Windows 10 or 11 using either the WSL 2 backend (available on all editions, including Home) or Hyper-V (Pro, Enterprise, or Education editions). The installation process is straightforward and includes everything needed to start using Docker immediately.

What are Docker images and containers?

Docker images are read-only templates used to create containers. Containers are running instances of images. Think of images as blueprints and containers as the actual running applications. You can create multiple containers from the same image, each running independently.

Can Docker improve application security?

Yes, Docker improves security through process isolation, resource limiting, and image scanning capabilities. However, proper configuration and security practices are essential for maintaining container security. Using official images, running as non-root users, and regular security updates are crucial practices.

What is a Dockerfile?

A Dockerfile is a text file containing instructions to automatically build Docker images. It defines the base image, application code, dependencies, and configuration needed to create a custom container image. Dockerfiles make image creation reproducible and version-controllable.

How much does Docker reduce deployment time?

Docker can reduce deployment time by 50-90% compared to traditional methods. The exact improvement depends on application complexity and current deployment processes. In my experience, deployments that once took hours now complete in minutes with proper Docker implementation.

Do I need Kubernetes to use Docker?

No, Kubernetes is not required for Docker. While Kubernetes adds orchestration capabilities for running containers at large scale, Docker works well on its own for single-host applications and smaller deployments. Many successful applications run on Docker without ever needing Kubernetes.