CASE STUDY

What is a Test Case? A Complete Guide for QA Engineers with Examples

As a seasoned QA engineer, one of the most frequently asked questions I get from new testers, developers, and even project stakeholders is: “What exactly is a test case, and how do you write one properly?”

Over the years, I’ve written, reviewed, and executed thousands of test cases across multiple domains and tech stacks. Whether you're just starting out in QA or refining your testing process, understanding how to design and write solid test cases is absolutely fundamental to delivering quality software. In this article, I’ll walk you through everything I’ve learned—from the definition to real-world examples, writing techniques, tools, and best practices.

What is a Test Case?

A test case is a documented set of conditions or variables under which a tester will determine whether an application, software system, or one of its features is working as expected.

In simpler terms, it’s a step-by-step guide that tells a tester exactly how to check a certain part of the application, what to input, what to expect as output, and how to determine if it passed or failed.

When I began my QA career, I used to think test cases were just a formality. Over time, I realized they are the backbone of any reliable QA process. They reduce ambiguity, improve test coverage, and provide traceability and accountability for every test that’s run.

Key Components of a Test Case

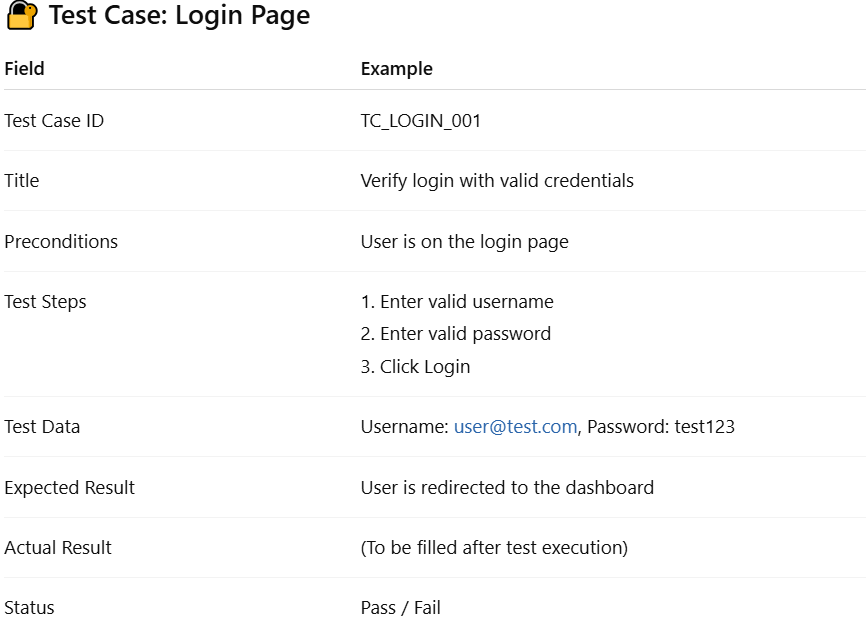

Here’s a breakdown of the key components that make up a well-written test case. These elements help create clarity, consistency, and effectiveness in your testing process.

1. Test Case ID

A unique identifier. It helps reference and organize test cases easily (e.g., TC_001).

2. Title / Test Case Name

A concise summary of what the test case is about (e.g., “Verify login with valid credentials”).

3. Preconditions

Conditions that must be met before executing the test. These could include the user being logged in, certain data being present, or the system being in a specific state.

4. Test Steps

Sequential actions the tester must perform. They must be detailed and easy to follow.

5. Test Data

Inputs required to perform the test, like usernames, passwords, or file uploads.

6. Expected Result

What should happen if the application works correctly.

7. Actual Result

What actually happened during the test.

8. Status

Pass or Fail, based on a comparison between the expected and actual results.

9. Priority / Severity

Priority indicates how important it is to run this test case; severity indicates the impact on the product if it fails.

10. Comments / Notes

Any additional information that helps clarify the scenario or highlights unusual behavior.
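The components above map naturally onto a small record type. The following is a minimal sketch, not a prescribed format: the class name `TestCaseRecord`, the field names, the "Not Run" status, and the sample values are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class TestCaseRecord:
    """One test case; fields mirror the components listed above (names are illustrative)."""
    case_id: str
    title: str
    preconditions: List[str]
    steps: List[str]
    test_data: Dict[str, str]
    expected_result: str
    actual_result: Optional[str] = None  # filled in during execution
    priority: str = "Medium"
    notes: str = ""

    @property
    def status(self) -> str:
        """Derive the status by comparing expected and actual results."""
        if self.actual_result is None:
            return "Not Run"  # assumed extra state for cases not yet executed
        return "Pass" if self.actual_result == self.expected_result else "Fail"


# Hypothetical sample values, loosely based on the login example above.
login_case = TestCaseRecord(
    case_id="TC_001",
    title="Verify login with valid credentials",
    preconditions=["User account exists"],
    steps=["Open login page", "Enter username and password", "Click Login"],
    test_data={"username": "alice", "password": "s3cret!"},
    expected_result="User is redirected to the dashboard",
)
print(login_case.status)  # Not Run
```

Deriving the status from the two result fields, rather than storing it separately, keeps the record from ever claiming "Pass" when the results disagree.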

Types of Test Cases

There are different types of test cases based on the objective, scope, and system behavior being validated. Understanding them helps me choose the right type for each test cycle.

1. Functional Test Cases

These verify specific functions of the software. For example, clicking the login button with valid credentials logs the user in.

2. Non-Functional Test Cases

These test aspects like performance, scalability, or usability.

3. UI Test Cases

Focused on checking the user interface, including element placement, responsiveness, and design.

4. Integration Test Cases

Test how multiple modules interact with each other.

5. Regression Test Cases

Ensure that new code changes haven’t broken existing functionality.

6. Smoke and Sanity Test Cases

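Quick, high-level test cases to check critical functionality. Smoke tests confirm that a build is stable enough for further testing, while sanity tests confirm that a specific change or fix behaves as intended.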

7. Negative Test Cases

Verify how the system handles invalid inputs or error scenarios.

In my day-to-day work, I often combine several of these types depending on the application complexity and the sprint goals.
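To make the difference between a functional and a negative test case concrete, here is a small sketch. The `login` function and the `VALID_USERS` table are hypothetical stand-ins for a real system under test, and the rule that empty fields raise `ValueError` is an assumption chosen for the example.

```python
# Hypothetical system under test: a tiny in-memory login check.
VALID_USERS = {"alice": "s3cret!"}


def login(username: str, password: str) -> bool:
    """Return True when the credentials match; reject empty fields outright."""
    if not username or not password:
        raise ValueError("username and password are required")
    return VALID_USERS.get(username) == password


def test_login_with_valid_credentials():
    # Functional: the feature does what it is supposed to do.
    assert login("alice", "s3cret!") is True


def test_login_with_wrong_password():
    # Negative: invalid input is rejected without an error.
    assert login("alice", "wrong-password") is False


def test_login_with_empty_username():
    # Negative: an error scenario is reported explicitly.
    try:
        login("", "s3cret!")
    except ValueError:
        return
    raise AssertionError("expected ValueError for an empty username")
```

Each function checks exactly one behavior, so a failure points directly at the scenario that broke.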

How to Write a Good Test Case: Step-by-Step

When I train junior QA engineers, I emphasize one golden rule: test cases should be written so clearly that anyone can pick them up and execute them without further guidance.

Here’s the step-by-step process I use:

Step 1: Understand Requirements

Before writing a test case, I thoroughly review the user stories, requirements, and design documents. I highlight edge cases and ambiguities.

Step 2: Define Test Scenarios

Break down the functionality into scenarios that need to be validated. For instance, for a login feature, I’ll consider valid login, invalid login, password visibility toggle, and so on.

Step 3: Write Test Steps

Clearly list every step to perform. Avoid skipping “obvious” actions—what’s obvious to me may not be to someone else.

Step 4: Define Inputs and Expected Output

Be specific with test data and outline exactly what the system should do in response.

Step 5: Review and Optimize

Before finalizing, I walk through the test case mentally or with a colleague to find gaps.

Best Practices:

- Use clear, simple language

- Keep it atomic (one objective per test case)

- Reuse where possible

- Avoid assumptions

Example Test Cases for Real-World Applications

🛒 Test Case: E-Commerce Checkout

| Field | Details |
| --- | --- |
| Test Case ID | TC_CHECKOUT_005 |
| Title | Verify checkout with credit card |
| Preconditions | Items in cart, user logged in |
| Test Steps | 1. Click on cart<br>2. Proceed to checkout<br>3. Select credit card<br>4. Enter details<br>5. Confirm payment |
| Test Data | Card: 4111 1111 1111 1111, Exp: 12/26 |
| Expected Result | Payment is successful and order confirmed |
| Status | Pass / Fail |

This is a simplified example, but it mirrors the kind of test case I use every day in real projects.

Test Case vs Test Scenario vs Test Script

Many QA beginners confuse these terms, but understanding the difference is key to test planning.

- Test Case: A detailed step-by-step document validating a specific functionality.

- Test Scenario: A high-level idea of what to test. For example, “Test login functionality.”

- Test Script: Typically used in automated testing, it’s code that performs the test steps.

In practice, I start with scenarios, write manual test cases, and then automate them into scripts where applicable.
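As an illustration of that last step, the checkout test case TC_CHECKOUT_005 from earlier could become a script along these lines. This is a sketch under stated assumptions: `FakeCheckout` is a hypothetical stand-in for the real application, and its acceptance rule (any 16-digit card with an MM/YY expiry) is invented for the example.

```python
import unittest


class FakeCheckout:
    """Hypothetical stand-in for the real application under test."""

    def __init__(self, cart_items, logged_in):
        self.cart_items = list(cart_items)
        self.logged_in = logged_in
        self.payment_method = None
        self.order_confirmed = False

    def select_payment_method(self, method):
        self.payment_method = method

    def confirm_payment(self, card_number, expiry):
        # Toy rule (an assumption): any 16-digit card with an MM/YY expiry is accepted.
        digits = card_number.replace(" ", "")
        card_ok = digits.isdigit() and len(digits) == 16
        expiry_ok = len(expiry) == 5 and expiry[2] == "/"
        ready = self.logged_in and bool(self.cart_items)
        self.order_confirmed = (
            ready and self.payment_method == "credit_card" and card_ok and expiry_ok
        )
        return self.order_confirmed


class CheckoutWithCreditCardTest(unittest.TestCase):
    def setUp(self):
        # Preconditions: items in cart, user logged in.
        # Constructing the object stands in for steps 1-2 (open cart, proceed to checkout).
        self.checkout = FakeCheckout(cart_items=["notebook"], logged_in=True)

    def test_tc_checkout_005(self):
        # Steps 3-5: select credit card, enter details, confirm payment.
        self.checkout.select_payment_method("credit_card")
        paid = self.checkout.confirm_payment("4111 1111 1111 1111", "12/26")
        # Expected result: payment is successful and the order is confirmed.
        self.assertTrue(paid)
        self.assertTrue(self.checkout.order_confirmed)
```

Saved to a file, this can be run with `python -m unittest`. The preconditions, steps, test data, and expected result of the manual case each appear as a labeled part of the script, which keeps the two traceable to each other.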

Tools for Writing and Managing Test Cases

Over time, I’ve used a wide variety of tools depending on the team size and budget. Here are some tools that have stood out in my experience:

- Excel / Google Sheets: Great for small teams or early stages.

- TestRail: Very feature-rich and organized for larger teams.

- JIRA + Zephyr/Xray: Convenient if you’re already using JIRA.

- TestLink: Open-source option for structured test case management.

- qTest / PractiTest: Enterprise-level solutions with robust integrations.

Each has its pros and cons. The choice depends on your team’s workflow and complexity.

Test Case Management in Agile & DevOps

In Agile environments, where requirements evolve rapidly, writing and managing test cases becomes even more dynamic. I’ve found it best to:

- Write lean, flexible test cases

- Collaborate closely with developers and product managers

- Use BDD (Behavior Driven Development) where applicable

- Continuously update cases with sprint changes

With DevOps and CI/CD, it’s essential that test cases—especially automated ones—are integrated into pipelines. I always ensure test cases are version-controlled and modular to accommodate frequent releases.

Manual vs Automated Test Cases

Manual Test Cases:

These are executed by humans. They’re best when:

- Testing new or evolving features

- Validating complex UI interactions

- Performing exploratory testing

Automated Test Cases:

These are executed by scripts. I automate test cases that:

- Are repetitive

- Have stable UI or logic

- Need to run across environments frequently

Tools like Selenium, Cypress, Playwright, or TestCafe have been part of my toolbox. However, not everything should be automated. I follow a hybrid strategy based on ROI and risk.

Common Challenges and How to Overcome Them

Writing test cases isn’t always smooth sailing. I’ve faced several challenges over the years:

1. Incomplete Requirements

When specs are unclear, I reach out early and ask clarifying questions.

2. Tight Deadlines

I prioritize high-risk areas and create high-level test cases quickly, refining them post-release.

3. Redundancy

I regularly audit and merge duplicate test cases to avoid maintenance headaches.

4. Outdated Test Cases

With every release, I update test cases to reflect the current state of the product.

Being proactive and maintaining open communication with the team has helped me overcome most issues.
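Part of the redundancy audit can be automated. The sketch below flags test cases whose steps and expected result are identical after normalizing case and whitespace. The dictionary keys (`id`, `steps`, `expected`) and the sample IDs are assumptions; a real test management tool would have its own export format, and near-duplicates with different wording would still need a manual review.

```python
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def find_duplicates(cases):
    """Return groups of test case IDs that share the same steps and expected result."""
    groups = defaultdict(list)
    for case in cases:
        key = (
            tuple(normalize(step) for step in case["steps"]),
            normalize(case["expected"]),
        )
        groups[key].append(case["id"])
    return [ids for ids in groups.values() if len(ids) > 1]


# Hypothetical sample data.
cases = [
    {"id": "TC_010", "steps": ["Open login page", "Click Login"], "expected": "Error shown"},
    {"id": "TC_042", "steps": ["open  login page", "click login"], "expected": "error shown"},
    {"id": "TC_043", "steps": ["Open login page"], "expected": "Login form is visible"},
]
print(find_duplicates(cases))  # [['TC_010', 'TC_042']]
```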

Frequently Asked Questions (FAQ)

Q1: What is the difference between a test case and a test scenario?

A test scenario is a high-level idea, while a test case includes all details needed to validate that scenario.

Q2: Can I write test cases without a requirements document?

Yes, especially in Agile. I rely on user stories, developer discussions, and UI mockups to draft initial test cases.

Q3: How detailed should a test case be?

Detailed enough that someone unfamiliar with the feature can execute it. Over-detailing can make maintenance harder, though.

Q4: What is a test case design technique?

Test case design techniques are systematic approaches, such as boundary value analysis, equivalence partitioning, and decision tables, that help improve test coverage.
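As a brief illustration of boundary value analysis, the sketch below generates the inputs just below, at, and just above each end of a valid range. The rule that a password must be 8 to 64 characters long is a hypothetical requirement chosen for the example, as are the function names.

```python
from typing import List


def boundary_values(minimum: int, maximum: int) -> List[int]:
    """Return the values just below, at, and just above each end of an inclusive range."""
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    candidates = {
        minimum - 1, minimum, minimum + 1,
        maximum - 1, maximum, maximum + 1,
    }
    return sorted(candidates)


def is_valid_password_length(length: int) -> bool:
    """Hypothetical rule under test: passwords must be 8 to 64 characters long."""
    return 8 <= length <= 64


for length in boundary_values(8, 64):
    print(length, is_valid_password_length(length))
# 7 False
# 8 True
# 9 True
# 63 True
# 64 True
# 65 False
```

Equivalence partitioning complements this: instead of testing every length, one representative from each class (too short, valid, too long) is usually enough, and the boundary values then cover the edges where off-by-one defects tend to hide.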

Q5: How many test cases should I write per feature?

There’s no fixed number. I focus on covering all functional paths, edge cases, and critical negative scenarios.

Conclusion

Test cases might seem tedious to write at first, but they are your strongest weapon in ensuring software quality. They bring clarity to the testing process, minimize the risk of human error, and act as documentation for future releases.

From login forms to complex e-commerce flows, test cases have helped me prevent bugs, meet deadlines, and build trust with stakeholders. Whether you're managing a team or testing solo, investing time in writing quality test cases will always pay off.