CASE STUDY QA Automation: The Future of Software Testing

Chapter 1: Introduction to QA Automation

1. Definition of Quality Assurance (QA) Automation

Quality Assurance (QA) Automation refers to the use of software tools, scripts, and frameworks to execute pre-defined test cases automatically. It ensures that software applications function as expected by running repetitive tests without human intervention. QA automation is a critical aspect of modern software development, improving test efficiency, accuracy, and coverage.

Example:

A company developing an e-commerce website can use Selenium (a popular automation testing tool) to automate login functionality tests, ensuring that users can log in successfully with valid credentials across different browsers.

2. Importance of QA Automation in Software Development

QA automation plays a crucial role in enhancing software quality, reducing human error, and speeding up the development process. It is particularly essential for continuous integration and deployment (CI/CD), where frequent testing is required to ensure stability.

Example:

A fintech company launching a mobile banking app needs to perform regression testing every time a new feature is added. Automation frameworks like Appium (for mobile apps) help run test cases on multiple devices simultaneously, reducing manual testing time and ensuring a smooth user experience.

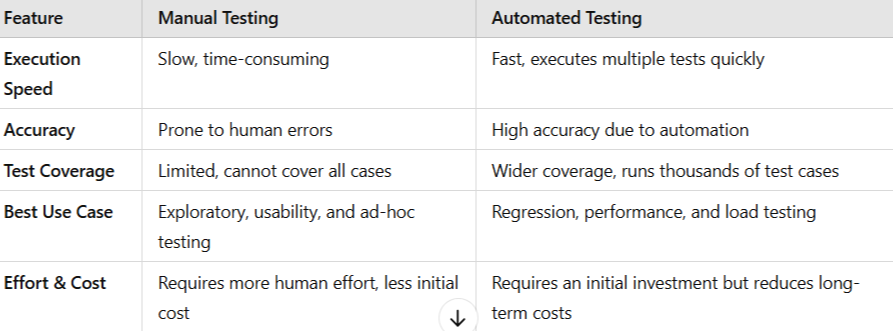

3. Differences Between Manual and Automated Testing

Example:

A gaming company developing a new mobile game may initially use manual testing to check user experience (UX), but for repetitive regression tests on different devices, they use automated testing with TestComplete or JUnit.

4. Benefits of Automated Testing

Automated testing offers several advantages over manual testing:

a. Faster Execution and Time Efficiency

Automated tests can run 24/7 without human intervention, saving significant time compared to manual testing.

Example: Running a full suite of regression tests manually on a large web application may take weeks, whereas automation with Selenium Grid can reduce this to a few hours.

b. Improved Test Accuracy

Automation reduces human error during execution by running the same test steps identically every time.

Example: Automating a login test script prevents missing validation checks that a manual tester might overlook.

c. Cost Savings in the Long Run

Although initial setup costs exist, automation reduces labor costs and ensures faster releases.

Example: A healthcare software company investing in automation via TestNG significantly reduces QA team workload, allowing faster compliance testing.

d. Increased Test Coverage

Automation allows the execution of thousands of test cases across multiple environments.

Example: A cloud-based SaaS company uses AWS Device Farm to run automated tests on hundreds of real devices simultaneously.

e. Reusability of Test Scripts

Once created, automated scripts can be reused across multiple projects.

Example: A retail company uses the same automated test suite for both its website and mobile app, saving development effort.

5. Common Misconceptions About QA Automation

a. "Automation Replaces Manual Testing"

Reality: Automated testing complements manual testing but does not replace it. Human testers are essential for exploratory, usability, and ad-hoc testing.

Example: While automation can test login functionality, usability issues like poor button placement still require manual review.

b. "Automation Is Always Expensive"

Reality: Although initial costs exist, automation reduces costs in the long term.

Example: Open-source tools like Selenium, JUnit, and Cypress provide cost-effective automation solutions.

c. "Automation Delivers Immediate ROI"

Reality: ROI depends on test complexity and execution frequency. Initial time investment in script creation is necessary.

Example: A startup implementing automation may take 3–6 months to see full benefits.

d. "Automated Tests Are 100% Bug-Free"

Reality: Automated tests only check predefined scenarios and may miss new bugs.

Example: A flight booking website’s automation script might validate seat selection but fail to detect hidden UI glitches.

e. "Any Tester Can Automate Tests Without Training"

Reality: Automation requires programming skills in languages like Java, Python, or JavaScript.

Example: Testers using Selenium need to understand XPath, CSS selectors, and Java/Python scripting.

Chapter 2: Understanding Automation Testing Frameworks

1. Definition and Purpose of Testing Frameworks

A testing framework is a set of guidelines, best practices, and tools used to create and execute automated tests efficiently. It provides a structured approach to testing by standardizing test script creation, execution, and reporting.

Purpose of Testing Frameworks:

- Improves test efficiency by reducing redundancy

- Ensures reusability of test scripts

- Increases maintainability and scalability of test cases

- Provides detailed reporting and logging

- Enhances collaboration among development and testing teams

Example:

A banking application undergoes frequent updates. A well-structured Selenium-based testing framework helps automate regression testing, ensuring that existing features continue to work correctly after updates.

2. Types of Testing Frameworks

A. Linear Scripting Framework

The Linear Scripting Framework follows a record-and-playback approach, where testers manually record test steps and execute them sequentially. It is simple but lacks flexibility and reusability.

Characteristics:

- No modularity—each test script is independent

- Hardcoded test data

- Best suited for small projects and one-time tests

Example:

A startup developing a basic website records a test script using Selenium IDE to validate login functionality. The test script follows a sequential flow without parameterization or modularity.

B. Modular Testing Framework

In a Modular Framework, test cases are divided into independent modules, making them reusable and easier to maintain.

Characteristics:

- Divides application functionality into smaller, reusable test scripts

- Reduces duplication of test scripts

- Enhances maintainability and scalability

Example:

A travel booking application has separate modules for login, flight booking, payment, and logout. Each module has reusable test scripts that can be combined to create different test scenarios.
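As a rough sketch of the modular idea, the travel booking example could be expressed as small step functions that are combined into different scenarios. The function names, the dictionary-based `session`, and the sample values are hypothetical; in a real framework each module would wrap UI or API actions.

```python
# Hypothetical module-level steps; each would wrap real UI or API actions.
def login(session, user):
    session["user"] = user
    return session

def book_flight(session, origin, destination):
    if "user" not in session:
        raise RuntimeError("login required before booking")
    session["booking"] = (origin, destination)
    return session

def pay(session, amount):
    if "booking" not in session:
        raise RuntimeError("booking required before payment")
    session["paid"] = amount
    return session

def logout(session):
    session.pop("user", None)
    return session

def scenario_book_and_pay():
    """Scenario 1: full booking flow assembled from the modules."""
    session = {}
    login(session, "traveler@example.com")
    book_flight(session, "LHR", "JFK")
    pay(session, 450.00)
    return logout(session)

def scenario_login_logout():
    """Scenario 2: reuses only the login and logout modules."""
    session = {}
    login(session, "traveler@example.com")
    return logout(session)

print(scenario_book_and_pay())
print(scenario_login_logout())
```

Because each step lives in one place, a change to the login flow would only require updating `login`, not every scenario that uses it.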

C. Data-Driven Testing Framework

A Data-Driven Framework separates test scripts from test data, allowing the same test script to run with multiple sets of data. Test data is stored in external files such as Excel, CSV, or databases.

Characteristics:

- Uses external data sources (Excel, JSON, CSV, databases)

- Reduces redundancy by executing the same script with different inputs

- Ideal for applications requiring multiple test cases with varying inputs

Example:

A banking application tests user login with 100 different username-password combinations by reading data from an Excel file and running the same test script multiple times.

Tools: Apache POI (for Excel in Java), Pandas (for CSV in Python), Selenium WebDriver with TestNG (for data parameterization).
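A minimal sketch of the data-driven approach, using only Python's standard `csv` module: one check runs once per data row. The `check_login` function, the `VALID_USERS` table, and the inline CSV rows are hypothetical stand-ins for a real login step and an external data file.

```python
import csv
import io

# Hypothetical test data; in practice this would live in an external CSV or Excel file.
CSV_DATA = """username,password,expected
alice,correct-horse,success
alice,wrong-password,failure
,correct-horse,failure
"""

# Hypothetical stand-in for the real login step (e.g., a Selenium flow).
VALID_USERS = {"alice": "correct-horse"}

def check_login(username, password):
    """Return 'success' if the credentials match, otherwise 'failure'."""
    return "success" if VALID_USERS.get(username) == password else "failure"

def run_data_driven_tests(csv_text):
    """Run the same check once per data row and collect (row, passed) pairs."""
    results = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        actual = check_login(row["username"], row["password"])
        results.append((row, actual == row["expected"]))
    return results

results = run_data_driven_tests(CSV_DATA)
for row, passed in results:
    print(row["username"] or "<empty>", "PASS" if passed else "FAIL")
```

Adding a new test case then means adding a row of data rather than writing a new script.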

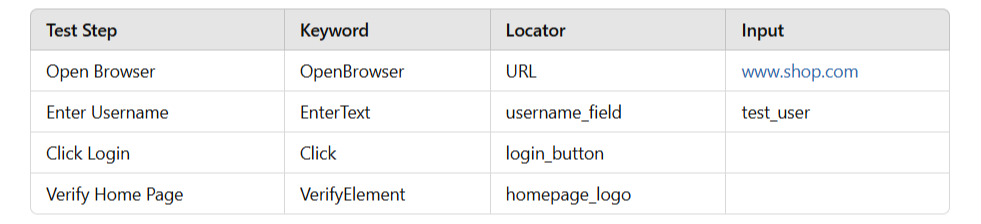

D. Keyword-Driven Testing Framework

A Keyword-Driven Framework uses keywords (predefined actions) to execute test steps. Testers define keywords in an external file (Excel, XML, JSON), making it easy to automate tests without deep programming knowledge.

Characteristics:

- Uses keywords (e.g., Click, EnterText, VerifyElement) to define actions

- Reduces dependency on programming skills

- Increases test case reusability and flexibility

Example:

A retail company automates checkout functionality by defining keywords like:

Tools: UFT (Unified Functional Testing), Selenium with Apache POI, Robot Framework
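As an illustrative sketch only, a keyword-driven runner can map keyword names such as Click, EnterText, and VerifyElement to functions and execute rows of steps in order. The `FakePage` class, the element names, and the checkout steps below are hypothetical; they stand in for a real browser driver and a real keyword file.

```python
# Hypothetical in-memory page used instead of a real browser driver.
class FakePage:
    def __init__(self):
        self.fields = {}
        self.clicked = []
        self.visible = {"cart-total"}

    def click(self, target):
        self.clicked.append(target)
        if target == "place-order":
            # Simulate the confirmation banner appearing after checkout.
            self.visible.add("order-confirmation")

    def enter_text(self, target, value):
        self.fields[target] = value

    def verify_element(self, target):
        if target not in self.visible:
            raise AssertionError(f"Element not visible: {target}")

# Map keyword names to the page actions that implement them.
KEYWORDS = {
    "Click": lambda page, target, value: page.click(target),
    "EnterText": lambda page, target, value: page.enter_text(target, value),
    "VerifyElement": lambda page, target, value: page.verify_element(target),
}

# Hypothetical test steps; in practice these rows come from Excel, XML, or JSON.
CHECKOUT_STEPS = [
    ("VerifyElement", "cart-total", None),
    ("EnterText", "card-number", "4111111111111111"),
    ("Click", "place-order", None),
    ("VerifyElement", "order-confirmation", None),
]

def run_steps(page, steps):
    """Execute each (keyword, target, value) row in order."""
    for keyword, target, value in steps:
        KEYWORDS[keyword](page, target, value)

page = FakePage()
run_steps(page, CHECKOUT_STEPS)
print("Checkout steps executed:", len(CHECKOUT_STEPS))
```

Testers edit only the step rows; the keyword implementations are written once by someone comfortable with code.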

E. Hybrid Testing Framework

A Hybrid Framework combines two or more frameworks (e.g., Data-Driven + Keyword-Driven) to leverage the best features of each approach.

Characteristics:

- Provides flexibility by combining multiple frameworks

- Balances maintainability, scalability, and efficiency

- Suitable for complex and enterprise-level applications

Example:

A healthcare management system requires extensive data-driven testing (patient records) and keyword-driven testing (automated test steps). A hybrid framework using Selenium, TestNG, and Apache POI allows smooth execution of both approaches.

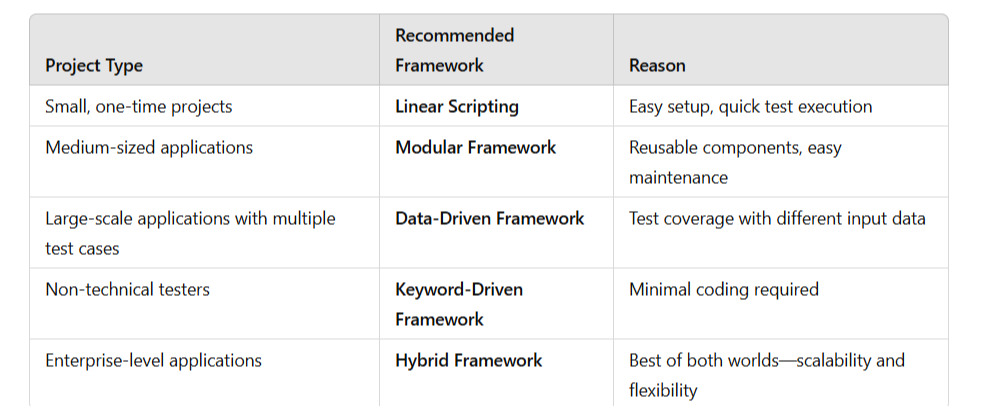

3. Choosing the Right Framework Based on Project Requirements

Example Scenarios:

- A social media platform chooses a Data-Driven Framework to test multiple user credentials.

- An AI-powered chatbot uses a Keyword-Driven Framework so non-technical testers can automate conversation flow.

- Logistics software adopts a Hybrid Framework combining Data-Driven + Modular to manage complex test scenarios.

Chapter 3: Tools for Quality Assurance (QA) Automation

1. Criteria for Selecting Automation Tools

Choosing the right QA automation tool depends on multiple factors:

a. Project Requirements

- Web, mobile, API, or performance testing?

- Support for the required programming language?

- Integration with CI/CD pipelines?

🔹 Example: A company developing a web-based e-commerce platform chooses Selenium for browser automation.

b. Ease of Use

- Is the tool user-friendly?

- Does it require extensive coding knowledge?

🔹 Example: Cypress is easier for frontend developers since it uses JavaScript and has built-in debugging tools.

c. Cross-Browser & Cross-Platform Support

- Can the tool test across Chrome, Firefox, Edge, and Safari?

- Can it run tests on Windows, Mac, and Linux?

🔹 Example: Playwright supports multiple browsers, including WebKit (Safari).

d. Integration with DevOps & CI/CD

- Can it be integrated with Jenkins, GitHub Actions, or Azure DevOps?

- Does it support parallel execution?

🔹 Example: TestNG allows parallel execution, making it suitable for CI/CD pipelines.

e. Cost (Open-Source vs. Commercial)

- Is it free, or does it require a license?

- Does it offer enterprise support?

🔹 Example: Selenium is free, while UFT (Unified Functional Testing) is a paid tool with advanced features.

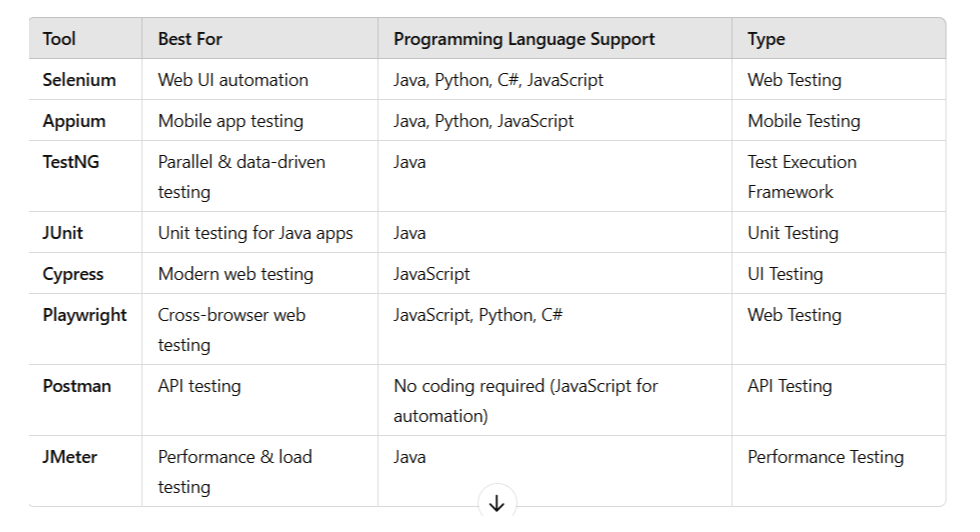

2. Popular QA Automation Tools

A. Selenium (Web Automation Testing)

Selenium is an open-source automation tool used for web application testing. It supports multiple browsers and programming languages like Java, Python, and JavaScript.

🔹 Example:

A banking website needs automated login testing. Using Selenium WebDriver, testers write scripts in Java to validate login credentials across Chrome, Firefox, and Edge.

Best Use Cases:

✔️ Cross-browser testing

✔️ Regression testing for web applications

✔️ Integrating with TestNG for parallel execution

B. Appium (Mobile App Automation Testing)

Appium is an open-source automation tool for iOS and Android applications. It allows cross-platform testing using a single test script.

🔹 Example:

A fintech company automates its mobile banking app using Appium to ensure login, money transfers, and notifications work on both iOS and Android.

Best Use Cases:

✔️ Mobile application testing (Android/iOS)

✔️ Cross-platform mobile testing

✔️ Works with Selenium WebDriver

C. TestNG (Test Execution Framework)

TestNG (Test Next Generation) is a testing framework inspired by JUnit but with additional functionalities like parallel execution, test grouping, and data-driven testing.

🔹 Example:

An airline reservation system automates booking, cancellation, and payment tests using TestNG with Selenium to run tests in parallel, reducing execution time.

Best Use Cases:

✔️ Large-scale automation projects

✔️ Parallel execution of test cases

✔️ Data-driven testing with Excel

D. JUnit (Unit Testing Framework for Java)

JUnit is a unit testing framework for Java applications. It is mainly used for testing individual components of software at the code level.

🔹 Example:

A developer working on an online payment API writes JUnit tests to check if the payment gateway returns correct transaction statuses.

Best Use Cases:

✔️ Java unit testing

✔️ Test-driven development (TDD)

✔️ Works with Selenium for web automation

E. Cypress (Frontend Web Testing)

Cypress is a modern JavaScript-based end-to-end testing framework, designed for frontend web testing. It runs directly in the browser, making tests faster and more reliable.

🔹 Example:

A SaaS company automates UI testing for a React dashboard using Cypress, ensuring that buttons, forms, and API calls work as expected.

Best Use Cases:

✔️ UI testing for modern web applications

✔️ Testing single-page applications (SPAs)

✔️ Frontend developers who prefer JavaScript

F. Playwright (Cross-Browser Web Testing)

Playwright, developed by Microsoft, is a powerful automation tool for web applications that supports multiple browsers, including Chromium, Firefox, and WebKit.

🔹 Example:

A content management platform uses Playwright to test editor functionalities across Safari, Edge, and Chrome.

Best Use Cases:

✔️ Cross-browser testing (including Safari)

✔️ Headless browser testing

✔️ Handling complex UI elements like pop-ups and authentication

G. Postman (API Testing)

Postman is a popular tool for API automation testing, allowing users to send HTTP requests and validate API responses.

🔹 Example:

An e-commerce application integrates payment APIs from Stripe and PayPal. Testers use Postman to verify whether the API returns correct responses for different payment methods.

Best Use Cases:

✔️ REST API and GraphQL testing

✔️ Automated API test execution with Postman Collections

✔️ Integration testing between frontend and backend

H. JMeter (Performance & Load Testing)

JMeter is an open-source tool used for performance, load, and stress testing of web applications.

🔹 Example:

An online learning platform expects 10,000 users during peak hours. Using JMeter, testers simulate multiple users accessing the site to measure response times and detect performance bottlenecks.

Best Use Cases:

✔️ Load and stress testing

✔️ Performance monitoring for web applications

✔️ API performance benchmarking

3. Tool Comparisons and Best Use Cases

Example Scenarios:

- A banking app chooses Appium for mobile testing and Postman for API testing.

- A React-based dashboard uses Cypress for UI testing.

- An online game platform performs load testing with JMeter to handle thousands of players.

Chapter 4: Test Automation Strategy and Planning

4.1 When to Automate Tests

Automating tests can save time and improve accuracy, but it is essential to determine the right time for automation.

Scenarios Where Automation is Beneficial

- Frequent Regression Testing – Automating repetitive test cases ensures stability after every code change.

- Example: A banking app undergoes monthly security updates. Automating login functionality testing ensures credentials validation remains intact.

- Data-Driven Testing – Automation is useful for running tests with multiple data inputs.

- Example: An e-commerce site must test checkout functionality using different payment methods and customer addresses.

- Load and Performance Testing – Manually simulating thousands of users is impractical.

- Example: An airline booking system must handle thousands of concurrent users during peak seasons.

- Complex Business Logic – Automating rule-based processes ensures consistency.

- Example: A hospital management system must verify insurance claim calculations accurately every time.

When Not to Automate Tests

- Exploratory Testing – Requires human judgment and creativity.

- Short-Term Projects – Automation setup may not be cost-effective.

- Frequently Changing UI – High maintenance cost for automation scripts.

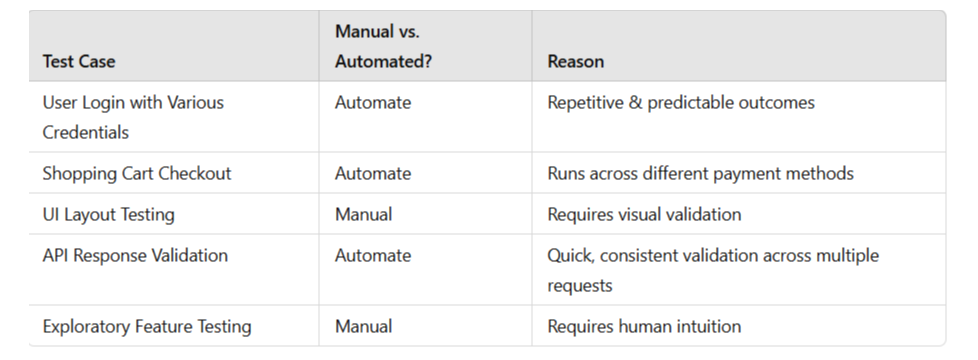

4.2 Identifying Test Cases for Automation

Not all test cases should be automated. The right selection can improve efficiency and reduce costs.

Criteria for Selecting Test Cases

- High Reusability – Test cases executed across multiple releases.

- High Risk – Features critical to business functionality.

- Stable Features – Parts of the application that do not frequently change.

- Time-Consuming Manual Execution – Tests that take a long time when done manually.

- Objective Validation – Tests with clear expected outcomes.

Examples of Suitable Test Cases

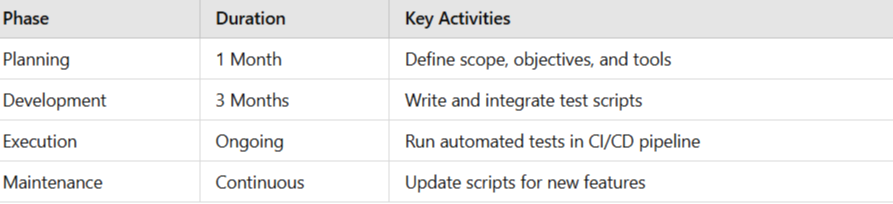

4.3 Setting Up an Automation Roadmap

An automation roadmap defines the approach, milestones, and implementation phases.

Steps to Develop an Automation Roadmap

- Define Objectives – Identify business and technical goals.

- Example: Reduce regression testing time by 50%.

- Select Tools and Frameworks – Choose the right tools based on project needs.

- Example: Selenium for UI testing, JMeter for load testing.

- Prioritize Test Cases – Focus on high-value automation areas first.

- Build a Skilled Team – Train or hire automation experts.

- Develop and Integrate Automation Scripts – Ensure CI/CD compatibility.

- Monitor and Maintain – Regularly update scripts to adapt to changes.

Example Roadmap Timeline

4.4 Creating a Test Automation Strategy

A well-defined strategy ensures long-term automation success.

Key Components of a Test Automation Strategy

- Scope and Goals – Define what will be automated and expected benefits.

- Example: Reduce test cycle time from 5 days to 1 day.

- Test Environment Setup – Define hardware, software, and data requirements.

- Tool Selection – Choose tools based on compatibility and ease of integration.

- Example: Playwright for modern web automation.

- Test Execution Plan – Schedule and automate test runs in CI/CD pipelines.

- Example: Nightly execution of automated regression tests.

- Reporting and Metrics – Track test coverage, pass/fail rates, and execution time.
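The reporting metrics mentioned above can be computed from raw results with very little code. The following is a sketch under assumed inputs: the `(name, passed, seconds)` tuple format and the sample results are hypothetical, not the output of any particular tool.

```python
def summarize_results(results):
    """Compute pass rate and total execution time from (name, passed, seconds) tuples."""
    total = len(results)
    passed = sum(1 for _, ok, _ in results if ok)
    duration = sum(seconds for _, _, seconds in results)
    return {
        "total": total,
        "passed": passed,
        "failed": total - passed,
        "pass_rate": passed / total if total else 0.0,
        "duration_seconds": duration,
    }

# Hypothetical results from one nightly run.
nightly = [
    ("test_login", True, 3.2),
    ("test_checkout", True, 8.5),
    ("test_payment_declined", False, 4.1),
    ("test_logout", True, 1.2),
]
summary = summarize_results(nightly)
print(summary)
```

Tracking these numbers per run makes trends visible, such as a pass rate that slowly declines or a suite that keeps getting slower.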

Example Strategy for a Web Application

- Scope: Automate login, checkout, and payment workflows.

- Tools: Selenium with Python, Jenkins for CI/CD.

- Execution: Run tests on Chrome, Firefox, and Edge nightly.

- Maintenance: Update scripts biweekly based on feature changes.

4.5 Estimating Costs and ROI for Test Automation

Automation requires an initial investment, but long-term benefits can outweigh costs.

Cost Factors

- Tool Licensing Costs – Paid tools like TestComplete vs. open-source tools like Selenium.

- Infrastructure Costs – Cloud-based execution vs. on-premise setup.

- Development Costs – Hiring and training automation engineers.

- Maintenance Costs – Updating scripts when features change.
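One common way to estimate first-year ROI is (savings − total cost) / total cost, where savings are the manual hours avoided multiplied by an hourly rate. The sketch below applies that formula; every figure in it is hypothetical and chosen only to make the arithmetic easy to follow.

```python
def automation_roi(manual_hours_per_run, automated_hours_per_run, runs_per_year,
                   hourly_rate, setup_cost, yearly_maintenance_cost):
    """Return (yearly_savings, total_cost, roi) for the first year of automation."""
    hours_saved = (manual_hours_per_run - automated_hours_per_run) * runs_per_year
    yearly_savings = hours_saved * hourly_rate
    total_cost = setup_cost + yearly_maintenance_cost
    roi = (yearly_savings - total_cost) / total_cost
    return yearly_savings, total_cost, roi

# Hypothetical figures for illustration only.
savings, cost, roi = automation_roi(
    manual_hours_per_run=40,
    automated_hours_per_run=2,
    runs_per_year=24,
    hourly_rate=50,
    setup_cost=20_000,
    yearly_maintenance_cost=5_000,
)
print(f"savings={savings}, cost={cost}, ROI={roi:.0%}")
```

With these assumed inputs, 912 hours are saved per year, worth 45,600 against a cost of 25,000, for an ROI of roughly 82%. Fewer runs per year or higher maintenance costs can easily push the result negative, which is why execution frequency matters so much.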

Chapter 5: Test Case Design and Implementation

5.1 Principles of Effective Test Case Design

The foundation of automated testing lies in designing test cases that are clear, reusable, and cover various scenarios. Effective test case design helps ensure that the tests run reliably and give useful feedback.

Key Principles:

1. Clarity and Simplicity

- Description: Test cases should be easy to understand by anyone, including developers, testers, and stakeholders. Avoid using ambiguous language, and ensure that each test case serves a specific purpose.

- Example:

- Instead of a vague test case like "Verify login", specify:

- "Enter valid username and password, click login button, and verify that the user is redirected to the dashboard page."

2. Atomicity

- Description: A test case should focus on a single feature or behavior. Atomic test cases help in pinpointing issues and ensure easier maintenance.

- Example:

- If you're testing the login functionality, the test should check only the login aspect (username/password validation), not the entire user flow.

3. Test Coverage

- Description: Ensure that test cases cover all important scenarios, including boundary cases and error conditions. This includes testing both valid and invalid inputs.

- Example:

- For a password field:

- Test with valid passwords.

- Test with empty passwords.

- Test with passwords that exceed the maximum length.

- Test with special characters or spaces.
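The password scenarios above can be written as a small table of cases. This is a sketch only: `validate_password`, its rules, and the `MAX_LENGTH` value are hypothetical and would be replaced by the application's real validation logic.

```python
MAX_LENGTH = 64  # assumed limit for illustration

def validate_password(password):
    """Hypothetical rule set: 8 to MAX_LENGTH characters, no spaces."""
    if not password:
        return "empty"
    if len(password) < 8:
        return "too_short"
    if len(password) > MAX_LENGTH:
        return "too_long"
    if " " in password:
        return "contains_space"
    return "ok"

# One row per scenario: (description, input, expected result).
CASES = [
    ("valid password", "S3cure!pass", "ok"),
    ("empty password", "", "empty"),
    ("exactly at the maximum length", "a" * MAX_LENGTH, "ok"),
    ("one character over the maximum", "a" * (MAX_LENGTH + 1), "too_long"),
    ("special characters", "p@$$w0rd!#", "ok"),
    ("embedded space", "pass word1", "contains_space"),
]

def run_cases():
    """Return the descriptions of any cases whose result differs from the expectation."""
    return [desc for desc, value, expected in CASES
            if validate_password(value) != expected]

failures = run_cases()
print("failed cases:", failures)
```

Testing both sides of a boundary (exactly at the limit and one over it) is what catches off-by-one errors in validation code.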

4. Reusability

- Description: Design test cases so they can be reused across different test scenarios and future releases. This saves time and resources.

- Example:

- Create a common login function that can be reused across multiple tests (e.g., testing user roles, session timeouts, etc.).

5. Independence

- Description: Each test case should be independent, meaning it does not depend on the outcome or execution of other tests.

- Example:

- If a test case for user registration fails, it should not affect the execution of the login test case.
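One way to keep tests independent is to have each test create the data it needs in a setup step instead of relying on an earlier test. The sketch below uses Python's built-in `unittest`; `FakeUserStore` is a hypothetical in-memory stand-in for the application's user database.

```python
import unittest

class FakeUserStore:
    """Hypothetical in-memory stand-in for the application's user database."""
    def __init__(self):
        self.users = {}

    def register(self, name, password):
        self.users[name] = password

    def login(self, name, password):
        return self.users.get(name) == password

class LoginTests(unittest.TestCase):
    def setUp(self):
        # Each test builds its own data instead of relying on a registration test.
        self.store = FakeUserStore()
        self.store.register("alice", "s3cret")

    def test_valid_login(self):
        self.assertTrue(self.store.login("alice", "s3cret"))

    def test_invalid_password(self):
        self.assertFalse(self.store.login("alice", "wrong"))

suite = unittest.defaultTestLoader.loadTestsFromTestCase(LoginTests)
outcome = unittest.TextTestRunner(verbosity=0).run(suite)
```

Since `setUp` runs before every test, the two login tests pass in any order and regardless of whether a separate registration test exists or fails.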

5.2 Writing Maintainable and Scalable Automated Tests

Automating tests is not just about writing scripts, but writing scripts that are maintainable and scalable over time as the application evolves.

Best Practices for Maintainability:

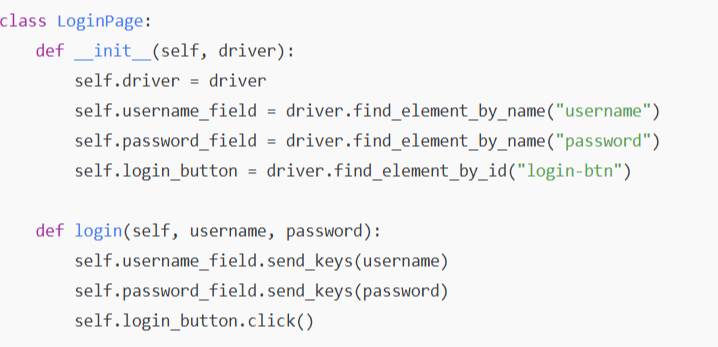

1. Use Design Patterns (Page Object Model - POM)

- Description: Use design patterns to separate test logic from the user interface details. The Page Object Model (POM) is a popular design pattern that helps in this separation, making it easier to maintain tests when UI changes occur.

- Example:

- If the login page's layout changes, only the LoginPage object needs to be updated, not the entire test suite.

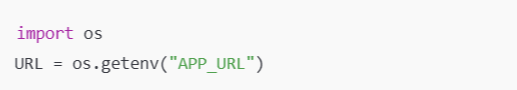

2. Avoid Hard-Coding Values

- Description: Hardcoding test data and locators can make the tests difficult to maintain. Use configuration files, data files, or environment variables to make tests flexible.

- Example:

- Instead of hardcoding the URL and credentials, use an external configuration file or environment variables:
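A minimal sketch of reading settings from environment variables, with local defaults as a fallback. The variable names (`TEST_BASE_URL`, `TEST_USERNAME`, `TEST_PASSWORD`) and the default values are assumptions chosen for illustration.

```python
import os

def load_test_config(env=os.environ):
    """Read test settings from environment variables, with safe local defaults."""
    return {
        "base_url": env.get("TEST_BASE_URL", "http://localhost:8000"),
        "username": env.get("TEST_USERNAME", "demo_user"),
        # Credentials should come from the environment or a secrets store, never from the script.
        "password": env.get("TEST_PASSWORD", ""),
    }

config = load_test_config()
print("Running tests against", config["base_url"])
```

The same test suite can then target a local, staging, or CI environment by changing environment variables rather than editing scripts.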

3. Use Assertions Effectively

- Description: Ensure your assertions are clear and check for the expected behavior of the application under test.

- Example:

- Instead of checking that the page title contains a keyword, ensure that the exact title is correct.

assert driver.title == "User Dashboard", "Title mismatch"

4. Modularize Tests

- Description: Break large test scripts into reusable and independent functions. This keeps tests small, focused, and easier to maintain.

- Example:

- If you need to test the same login functionality across different test cases, create a reusable login function:

    def login(driver, username, password):
        login_page = LoginPage(driver)
        login_page.login(username, password)

5. Keep Tests DRY (Don’t Repeat Yourself)

- Description: Avoid repeating the same test code in multiple places. Instead, create helper functions or libraries that can be reused.

- Example:

- If multiple test cases need to verify the presence of an element, create a helper function:

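    from selenium.common.exceptions import NoSuchElementException

    def element_present(driver, by, locator):
        """Return True if the element exists on the page, False otherwise."""
        try:
            driver.find_element(by, locator)
            return True
        except NoSuchElementException:
            return False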

5.3 Best Practices for Scripting Test Cases

Writing automated test scripts requires a consistent approach to ensure quality, efficiency, and future-proofing.

Best Practices:

1. Consistency in Code Style

- Description: Maintain a consistent coding style across all test scripts. This makes the tests easier to read, understand, and maintain.

- Example:

- Stick to one naming convention (e.g., snake_case for Python variables and methods).

2. Use of Comments and Documentation

- Description: Write clear comments to explain the test flow, especially when the code is complex or involves tricky logic.

- Example:

    # Create the login page object and sign in with test credentials
    login_page = LoginPage(driver)
    login_page.login("user", "password")

3. Optimize Test Execution Time

- Description: Ensure that your test scripts run as efficiently as possible to minimize wait time.

- Example:

- Avoid unnecessary waits and fixed delays. Use implicit or explicit waits only where needed, and avoid mixing the two, since the Selenium documentation warns that doing so can cause unpredictable wait times.
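To show why a condition-based wait is faster than a fixed delay, here is a small polling helper written with the standard library only. It is conceptually similar to an explicit wait but is not Selenium's implementation; `wait_until` and `page_is_ready` are hypothetical names used for illustration.

```python
import time

def wait_until(condition, timeout=5.0, interval=0.1):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)

# Hypothetical slow resource that becomes ready 0.3 seconds after start.
start = time.monotonic()

def page_is_ready():
    return time.monotonic() - start >= 0.3

wait_until(page_is_ready, timeout=2.0, interval=0.05)
elapsed = time.monotonic() - start
print(f"ready after about {elapsed:.2f}s instead of a fixed sleep")
```

The test continues as soon as the condition holds, so the timeout is only a worst case rather than a cost paid on every run.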

Chapter 6: Challenges and Solutions in Test Automation

Automated testing can greatly improve efficiency, but it also presents unique challenges that need to be addressed for successful implementation. This chapter dives into common problems in QA automation and offers strategies for overcoming them.

6.1 Common Challenges in QA Automation

Automated testing is not without its challenges. Identifying these challenges early on allows for better planning and more effective solutions.

Key Challenges:

1. Initial Setup and Configuration

- Description: Setting up the automation framework, configuring tools, and establishing environments for testing can be time-consuming and complex, especially when integrating with other systems (CI/CD, version control, etc.).

- Solution:

- To tackle this, you can:

- Use pre-configured test environments (e.g., Docker containers).

- Document setup steps clearly.

- Use cloud-based test platforms to avoid managing infrastructure.

2. Test Maintenance

- Description: Automated tests require constant updates to adapt to changes in the application, such as UI modifications, new features, or new workflows.

- Solution:

- Follow modular test design (e.g., Page Object Model).

- Implement a test suite that prioritizes critical tests, leaving less important tests for periodic updates.

- Use version control for test scripts to track and roll back changes efficiently.

3. Complex Test Scenarios

- Description: Some features or workflows may be too complex to automate easily, especially if they involve intricate user interactions or require significant setup.

- Solution:

- Break complex scenarios into smaller, manageable components and automate in steps. This modular approach helps to isolate and simplify the automation process.

6.2 Dealing with Flaky Tests and False Positives

Flaky tests are tests that yield inconsistent results, passing or failing intermittently without any change to the code. False positives occur when a test reports a failure even though the application behaves correctly.

Causes and Mitigation of Flaky Tests:

1. Causes of Flaky Tests:

- Synchronization Issues: The test may proceed before elements are fully loaded.

- Network Latency: Sometimes, external systems or APIs may cause delays.

- Timing Issues: Tests that depend on timing (like waiting for elements to appear) may fail unpredictably.

2. Strategies to Mitigate Flakiness:

- Explicit Waits: Use WebDriverWait to ensure that elements are ready before interacting with them.

- Example:

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.ID, "submit")))

- Retries: Use retry mechanisms for unstable tests to automatically re-run them if they fail.

- Example:

- Using pytest’s pytest-rerunfailures plugin:

pytest --reruns 3

- Stabilize the Test Environment: Ensure that the test environment is stable, using dedicated test servers or using virtualization technologies (like Docker) for consistent test environments.

- Test Parallelization: Running tests in parallel can expose hidden dependencies between tests, such as shared state or reliance on execution order, that otherwise surface only as intermittent failures.

- Randomization in Tests: When dealing with dynamic data, randomize certain inputs to catch edge cases and avoid fixed assumptions that can lead to flakiness.
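Randomized inputs are only useful if a failing run can be reproduced. One possible approach, sketched below, is to generate the data from a seed and log that seed with every run. The `make_random_order` and `order_total` functions are hypothetical examples, not part of any framework.

```python
import random

def make_random_order(seed=None):
    """Generate a random order payload; the seed is returned so failures can be replayed."""
    if seed is None:
        seed = random.randrange(2**32)
    rng = random.Random(seed)
    order = {
        "quantity": rng.randint(1, 100),
        "unit_price": round(rng.uniform(0.01, 500.0), 2),
    }
    return seed, order

def order_total(order):
    """Hypothetical function under test."""
    return round(order["quantity"] * order["unit_price"], 2)

seed, order = make_random_order()
# Log the seed so a failing run can be reproduced exactly.
print(f"seed={seed} order={order}")
assert order_total(order) > 0
```

Passing the logged seed back in, for example `make_random_order(seed=1234)`, regenerates the same input, which turns an intermittent failure into a repeatable one.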

6.3 Handling UI Changes and Test Maintenance

UI changes are common in software development, and they can cause significant disruptions in automated tests, especially if tests are tightly coupled with specific UI elements.

Key Challenges:

- Frequent UI Updates: Minor UI changes, like relocating buttons or changing labels, can break the tests.

- Handling Dynamic Elements: Applications that dynamically generate or alter elements (e.g., through JavaScript or AJAX) can make it difficult to write stable tests.

Solutions:

1. Use Stable Locators:

Avoid relying on brittle locators like class names or element indices, which are prone to change. Instead, use more reliable attributes such as:

- Unique ID or data-* attributes.

- XPath or CSS selectors with precise conditions.

- aria-label or other accessibility labels that are less likely to change.

- Example:

driver.find_element(By.CSS_SELECTOR, "[data-test='submit']")

2. Page Object Model (POM):

Create a Page Object Model (POM) to centralize all UI element locators and reduce test maintenance. If the UI changes, only the page object needs to be updated.

- Example:

    from selenium.webdriver.common.by import By

    class LoginPage:
        # Locators live in one place, so a UI change means a single update here
        USERNAME = (By.ID, "username")
        PASSWORD = (By.ID, "password")

        def __init__(self, driver):
            self.driver = driver

        def login(self, username, password):
            self.driver.find_element(*self.USERNAME).send_keys(username)
            self.driver.find_element(*self.PASSWORD).send_keys(password)

3. Use Waits for Dynamic Content:

Dynamically loaded content can sometimes cause synchronization problems. Explicit waits help address these problems.

- Example:

WebDriverWait(driver, 10).until(EC.element_to_be_clickable((By.XPATH, "//button[@id='submit']")))

4. Modular Testing:

Divide complex workflows into smaller, reusable steps. This helps avoid rewriting entire test suites whenever a UI change happens.

6.4 Strategies for Reducing Test Execution Time

Long test execution times can impact the feedback loop, making it difficult to get quick insights. Optimizing test execution speed is crucial for maintaining an efficient CI/CD pipeline.

Key Strategies:

1. Parallel Test Execution

Running tests in parallel can dramatically reduce the total test time. You can use tools like Selenium Grid or cloud-based platforms such as Sauce Labs or BrowserStack to run tests on multiple browsers or devices simultaneously.

- Example:

- Use pytest with the pytest-xdist plugin to run tests concurrently:

pytest -n 4 # Run tests on 4 parallel processes

2. Selective Testing

- Run Only the Necessary Tests: Instead of running the full suite every time, run a subset of tests based on the changes made (e.g., test affected modules).

- Test Prioritization: Prioritize tests based on their business impact or risk level.

- Use tagging or metadata to identify critical tests and execute them more frequently.
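A simple sketch of change-based selection combined with prioritization: tests are chosen if they cover a changed module or carry an always-run priority. The catalog structure, test names, and priority labels are hypothetical; test frameworks usually offer their own tagging mechanisms for the same purpose.

```python
# Hypothetical test catalog: each entry lists the modules it covers and a priority.
TEST_CATALOG = [
    {"name": "test_login", "modules": {"auth"}, "priority": "critical"},
    {"name": "test_checkout", "modules": {"cart", "payment"}, "priority": "critical"},
    {"name": "test_profile_avatar", "modules": {"profile"}, "priority": "low"},
    {"name": "test_refund", "modules": {"payment"}, "priority": "medium"},
]

def select_tests(changed_modules, always_run=("critical",)):
    """Pick tests that touch a changed module, plus every test at an always-run priority."""
    changed = set(changed_modules)
    return [
        test["name"]
        for test in TEST_CATALOG
        if test["modules"] & changed or test["priority"] in always_run
    ]

selected = select_tests(["payment"])
print(selected)
```

For a change limited to the payment module, the low-priority profile test is skipped while all critical tests still run, which shortens feedback without dropping the most important checks.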

3. Use Lightweight Tools

Use lighter frameworks and tools for tests that don’t require extensive setup, such as API tests or unit tests, which run faster than UI-based tests.

4. Test Data Optimization

- Use Mocks and Stubs: For tests that require external dependencies (e.g., APIs, databases), use mock data or stubs to simulate the responses. This avoids waiting for real external resources to respond.

- Mocking Example:

    from unittest.mock import patch

    # 'module.function_name' is a placeholder for the dependency being replaced
    with patch('module.function_name') as mock_func:
        mock_func.return_value = 'Mocked Response'
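A more complete, runnable variant of the same idea is sketched below using `unittest.mock.patch.object`. The `RateClient` class and `convert` function are hypothetical; they stand in for code that depends on a slow or unavailable external API.

```python
from unittest.mock import patch

class RateClient:
    """Hypothetical client for an external exchange-rate API."""
    def fetch_rate(self, currency):
        raise ConnectionError("real network call is not available in tests")

def convert(amount, currency, client):
    """Code under test: converts an amount using the client's rate."""
    return round(amount * client.fetch_rate(currency), 2)

client = RateClient()
# Replace the slow external call with a canned response for the duration of the block.
with patch.object(RateClient, "fetch_rate", return_value=0.5) as mock_fetch:
    converted = convert(100, "EUR", client)

print(converted)
# Verify that the code under test asked for the expected currency.
mock_fetch.assert_called_once_with("EUR")
```

The test exercises the conversion logic without touching the network, and the final check confirms the dependency was called as intended.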

5. Avoid UI-Heavy Tests Where Possible

UI-based tests tend to be slower due to the time it takes for elements to load. Where possible, focus on unit or API tests for faster feedback.

6.5 Ensuring Cross-Browser and Cross-Platform Compatibility

In modern software development, applications need to be tested across different browsers and platforms to ensure consistent behavior.

Challenges:

- Different browsers (Chrome, Firefox, Edge, Safari) can render the same UI differently.

- Mobile devices (iOS, Android) may exhibit different behaviors due to different rendering engines, screen sizes, and user interactions.

Solutions:

1. Cross-Browser Testing Tools

- Use cross-browser testing tools like Selenium Grid, Sauce Labs, or BrowserStack to ensure compatibility across different browsers and devices.

- Example: Run tests across multiple browsers in parallel using Selenium Grid:

java -jar selenium-server-<version>.jar hub # Selenium 4 Grid hub; <version> is the downloaded release

2. Automated Browser-Specific Testing

- Ensure that tests cover browser-specific quirks (e.g., CSS rendering differences or JavaScript engine discrepancies).

- Use browser-specific CSS or JavaScript workarounds if necessary.

3. Device and Mobile Testing

- For mobile devices, use tools like Appium, Selenium WebDriver for mobile, or cloud platforms like Sauce Labs for testing across real mobile devices.

- Example:

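    from appium import webdriver
    from appium.options.android import UiAutomator2Options

    # Placeholder capabilities for the device and app under test
    desired_caps = {
        'platformName': 'Android',
        'deviceName': 'Samsung Galaxy S21',
        'app': '/path/to/app.apk'
    }

    # Recent Appium Python clients expect an options object rather than a raw dict
    options = UiAutomator2Options().load_capabilities(desired_caps)
    driver = webdriver.Remote('http://localhost:4723/wd/hub', options=options)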

4. Responsive Design Testing

- For web applications, ensure that your application’s responsive design works across different screen sizes. Tools like Selenium WebDriver or Puppeteer allow you to simulate different screen sizes.

- Example:

driver.set_window_size(375, 667) # Mobile viewport
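The single resize call can be extended into a loop over several screen sizes. The sketch below is illustrative only: the viewport dimensions, the check_layout_at_viewports helper, and the check callback are assumptions, and driver is expected to be any object with Selenium-style set_window_size and get methods.

```python
# Assumed screen sizes; adjust to the devices your users actually have.
VIEWPORTS = {
    "mobile": (375, 667),
    "tablet": (768, 1024),
    "desktop": (1440, 900),
}


def check_layout_at_viewports(driver, url, check, viewports=VIEWPORTS):
    """Load the page at each window size and record whether the check passes.

    check is a caller-supplied function (driver, viewport_name) -> bool,
    for example one that verifies the navigation menu is visible.
    """
    results = {}
    for name, (width, height) in viewports.items():
        driver.set_window_size(width, height)  # resize before loading
        driver.get(url)
        results[name] = bool(check(driver, name))
    return results
```

Note that set_window_size sets the outer browser window, so the visible page area can be slightly smaller than the requested dimensions.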

5. Automating with Headless Browsers

- Headless Mode allows tests to be run without launching a browser GUI, speeding up execution. It's particularly useful for browser compatibility checks.

- Example:

from selenium import webdriver

options = webdriver.ChromeOptions()
options.add_argument('--headless')  # run Chrome without a visible window
driver = webdriver.Chrome(options=options)

Chapter 7: Future Trends in QA Automation

The landscape of QA automation is evolving rapidly, with new methodologies, tools, and platforms continually emerging. In this chapter, we’ll explore some of the most significant trends and their implications for QA professionals.

7.1 The Rise of No-Code and Low-Code Test Automation

No-code and low-code platforms have gained considerable attention in recent years. These tools allow testers and even non-technical users to automate tests without needing to write complex code.

Key Features:

- No-Code Tools: Provide a drag-and-drop interface to create tests without writing any code. These tools often come with pre-built templates for common test scenarios.

- Example: Testim.io, Katalon Studio.

- Low-Code Tools: Offer visual interfaces and require minimal code writing. Users may write small snippets of code or configure complex logic using visual workflows.

- Example: Cypress, Appium Studio.

Benefits:

- Faster adoption of automation in teams with fewer technical resources.

- Empowering business users and testers to contribute to automation.

- Reducing the bottleneck of manual test creation and allowing for rapid testing iterations.

Challenges:

- Limited flexibility for complex testing scenarios.

- Risk of low-quality test scripts due to a lack of coding knowledge.

7.2 Shift-Left and Shift-Right Testing Approaches

The terms Shift-Left and Shift-Right refer to changes in when and where testing activities occur during the software development lifecycle (SDLC).

Shift-Left Testing:

This approach moves testing earlier in the development process, starting with requirements and design and continuing as code is written. The goal is to detect issues as early as possible, reducing the cost and time required to fix them.

Benefits:

- Early detection of defects.

- Faster feedback and improved collaboration between developers and QA teams.

- Reduced cost of fixing bugs when caught early.

Example: Automated unit tests, static code analysis, and integration tests conducted during the coding phase.

Shift-Right Testing:

Shift-Right involves testing in production or post-release. This approach allows teams to gather real user feedback and behavior, improving the software’s quality in live environments.

Benefits:

- Real-time performance monitoring.

- Continuous testing in production.

- Collecting data to drive further improvements based on actual user experiences.

Example: Canary releases, A/B testing, and automated monitoring of application performance in production environments.
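One common way to implement a canary release is to route a fixed percentage of users to the new version based on a stable hash of their user ID. The sketch below shows that idea under stated assumptions: the function names, the "checkout-v2" salt, and the bucket scheme are hypothetical, and production systems usually delegate this to a feature-flag service or load balancer.

```python
import hashlib


def in_canary(user_id: str, percent: int, salt: str = "checkout-v2") -> bool:
    """Return True if this user should be routed to the canary build."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    # Hash salt + user ID so the same user always lands in the same bucket.
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # stable bucket in the range 0..99
    return bucket < percent


def canary_share(user_ids, percent: int) -> float:
    """Fraction of the given users that fall into the canary group."""
    user_ids = list(user_ids)
    return sum(in_canary(u, percent) for u in user_ids) / len(user_ids)
```

Because the bucket depends only on the salt and the user ID, raising the percentage from 10 to 50 keeps every existing canary user in the canary group, which makes gradual rollouts and their monitoring data easier to compare.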

7.3 Cloud-Based Test Automation Platforms

Cloud-based test automation platforms provide infrastructure, tools, and resources hosted on the cloud, allowing teams to run automated tests at scale without the need for complex on-premise infrastructure.

Benefits:

- Scalability: Cloud platforms can scale up or down to accommodate large test suites, enabling quicker test execution.

- Cost-Efficiency: Teams can pay only for the resources they use, reducing the need for expensive on-premises hardware.

- Cross-Platform Testing: Cloud services often provide access to a variety of devices, browsers, and operating systems for testing.

- Collaboration: Teams can easily share test results and collaborate on improving test cases.

Popular Platforms:

- Sauce Labs: Cloud platform offering cross-browser and mobile testing on real devices.

- BrowserStack: Provides cloud-based access to real devices and browsers for testing.

- TestGrid: Supports both cloud-based testing and scalable automation.

7.4 The Impact of DevOps and Agile on QA Automation

DevOps and Agile methodologies have reshaped how software is developed, and testing is no exception. Both emphasize continuous integration and continuous delivery (CI/CD), making automation a crucial part of the software development pipeline.

DevOps Impact:

- Continuous Testing: Automated tests are continuously integrated into the pipeline, enabling rapid feedback and faster delivery.

- Infrastructure as Code (IaC): QA teams now use IaC tools (like Terraform) to automate the deployment of test environments.

Agile Impact:

- Iterative Testing: In Agile environments, testing is conducted continuously throughout the sprint. Automation is crucial to keep up with rapid iterations.

- Collaboration: Testers work closely with developers, business analysts, and product owners to create automated tests aligned with user stories and acceptance criteria.
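In a CI/CD pipeline, continuous testing often ends with a quality gate: a small step that reads the test report and decides whether the build may proceed. The following sketch assumes the test runner writes a JUnit-style XML report; the function names and the min_pass_rate threshold are illustrative assumptions, not part of any specific CI product.

```python
import xml.etree.ElementTree as ET


def summarize_junit(xml_text: str) -> dict:
    """Count passed, failed, and skipped test cases in a JUnit-style report."""
    root = ET.fromstring(xml_text)
    summary = {"passed": 0, "failed": 0, "skipped": 0}
    for case in root.iter("testcase"):
        if case.find("failure") is not None or case.find("error") is not None:
            summary["failed"] += 1
        elif case.find("skipped") is not None:
            summary["skipped"] += 1
        else:
            summary["passed"] += 1
    return summary


def quality_gate(xml_text: str, min_pass_rate: float = 1.0) -> int:
    """Return a process exit code: 0 lets the pipeline continue, 1 stops it."""
    summary = summarize_junit(xml_text)
    executed = summary["passed"] + summary["failed"]
    if executed == 0:
        return 1  # nothing ran, so treat the build as unverified
    pass_rate = summary["passed"] / executed
    return 0 if pass_rate >= min_pass_rate else 1
```

A pipeline step could read the report file, pass its contents to quality_gate, and call sys.exit with the returned value so that a failing suite blocks the deployment stage.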

7.5 Emerging Tools and Technologies in Automation Testing

The QA automation landscape is continuously evolving, and new tools and technologies are emerging to address complex testing needs. Some of the most exciting innovations include:

1. AI and Machine Learning:

- AI-Powered Test Automation: AI tools like Test.ai use machine learning to automatically identify UI elements and adapt to changes in the application without needing human intervention.

- Example: AI-driven tools can create tests based on user interactions or adapt to UI changes dynamically, improving test maintenance.
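Commercial AI tools rely on trained models whose internals are not described here. As a much simpler, rule-based stand-in, the sketch below illustrates the underlying "self-healing" idea: if the primary locator stops matching after a UI change, the test falls back to alternative locators instead of failing immediately. The function name, the locator tuples, and the not_found parameter are assumptions made for illustration.

```python
from typing import Any, Callable, Sequence, Tuple

Locator = Tuple[str, str]  # (strategy, value), e.g. ("id", "login-btn")


def find_with_fallback(
    find: Callable[[str, str], Any],
    locators: Sequence[Locator],
    not_found: Tuple[type, ...] = (LookupError,),
):
    """Try each locator in order; return (element, locator_that_matched)."""
    errors = []
    for strategy, value in locators:
        try:
            return find(strategy, value), (strategy, value)
        except not_found as exc:
            # Remember why this locator failed, then try the next one.
            errors.append(f"{strategy}={value}: {exc}")
    raise LookupError("no locator matched: " + "; ".join(errors))


# Usage with a dictionary standing in for a page whose button ID was renamed.
page = {("css selector", "button.login"): "<login button>"}
element, used = find_with_fallback(
    lambda strategy, value: page[(strategy, value)],
    [("id", "login-btn"), ("css selector", "button.login")],
)
```

With Selenium, find could be driver.find_element and not_found could be (NoSuchElementException,). Logging which fallback matched gives the team a signal that the primary locator needs updating.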

2. Blockchain Testing:

- As blockchain technology gains traction, new tools will emerge to test decentralized applications (dApps) and smart contracts.

- Example: Tools like Truffle and Ganache provide frameworks for automating tests on blockchain-based applications.

3. Autonomous Test Automation:

- Tools that integrate AI to fully automate the test creation, execution, and maintenance process.

- Example: mabl and Testim.io use AI to create and manage tests with minimal human input.

4. Robotic Process Automation (RPA):

- RPA tools like UiPath automate repetitive, rule-based tasks by mimicking how a person interacts with an application, which can speed up test execution and reduce manual effort.

5. Quantum Computing for Testing:

- Quantum computing is in the early stages, but it may provide the ability to simulate complex scenarios and conduct high-speed testing of large datasets in the future.

Conclusion:

The future of QA automation is bright and filled with opportunities for enhanced efficiency and innovation. As new tools, approaches, and methodologies emerge, testing teams must adapt and embrace these changes. No-code platforms, Shift-Left and Shift-Right testing, cloud solutions, and DevOps practices will play an essential role in shaping the automation landscape. By staying agile and integrating the latest trends, organizations can continue to improve software quality while accelerating delivery.